Strengthening the Performance of Gen AI Models with Neo4j Graph Database

Customers often purchase a separate vector database to run generative AI efficiently. Neo4j offers an alternative: its graph database platform integrates with cloud-based generative AI platforms such as Microsoft Azure, Google Cloud, and AWS. This allows businesses to enhance the performance of generative AI models, improve pattern recognition, and support users in exploring data in natural language.

In particular, Neo4j has strengthened its strategic partnership with Google Cloud by releasing products integrated with Google Cloud Vertex AI features. This enables enterprises to create more value and impact with data and large language models (LLMs) through features like real-time reinforcement and grounding, pattern identification in large datasets, and exploring data in natural language.

This post aims to illustrate how the integration between Google Cloud Vertex AI and Neo4j Graph Database is achieved.

Why should you use generative AI to build knowledge graphs?

Enterprises struggle to extract value from vast amounts of data. While structured data is provided in various formats through well-defined APIs, unstructured data found in documents, engineering drawings, case sheets, and financial reports can be more challenging to integrate into a comprehensive knowledge management system.

With Neo4j, you can build a knowledge graph from both structured and unstructured sources. Modeling this data as a graph allows you to gain insights that may be hard to obtain in other ways. Graph data can be massive and messy to handle, but with Google Cloud’s generative AI, you can easily construct a knowledge graph in Neo4j and then interact with it using natural language.

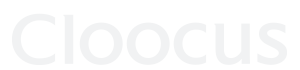

The architecture diagram below illustrates how Google Cloud and Neo4j collaborate to build a knowledge graph and facilitate interaction:

The diagram shows two data flows:

- Knowledge extraction – On the left side of the diagram, blue arrows show data flowing from structured and unstructured sources into Vertex AI. Generative AI is used to extract entities and relationships from that data which are then converted to Neo4j Cypher queries that are run against the Neo4j database to populate the knowledge graph. This work was traditionally done manually with handcrafted rules. Using generative AI eliminates much of the manual work of data cleansing and consolidation.

- Knowledge consumption – On the right side of the diagram, green arrows show applications that consume the knowledge graph. They present natural language interfaces to users. Vertex AI generative AI converts that natural language to Neo4j Cypher that is run against the Neo4j database. This allows non-technical users to interact more closely with the database than was possible without generative AI.

We’re seeing this architecture come up again and again across verticals. Some examples include:

- Healthcare – Modeling the patient journey for multiple sclerosis to improve patient outcomes

- Manufacturing – Using generative AI to collect a bill of materials that extends across domains, something that wasn’t tractable with previous manual approaches

- Oil and gas – Building a knowledge base with extracts from technical documents that users without a data science background can interact with. This enables them to more quickly educate themselves and answer questions about the business.

Now that we have a general overview of where this technology can be applied, let’s focus on a specific case.

Dataset and architecture

In this example, we will use the generative AI capabilities of Vertex AI to parse documents from the Securities and Exchange Commission (SEC). Asset managers overseeing assets of over $100 million are required to submit Form 13 quarterly. Form 13 describes their holdings.

We will build a knowledge graph from entities that represent the information extracted, showing the holdings shared among different asset managers.

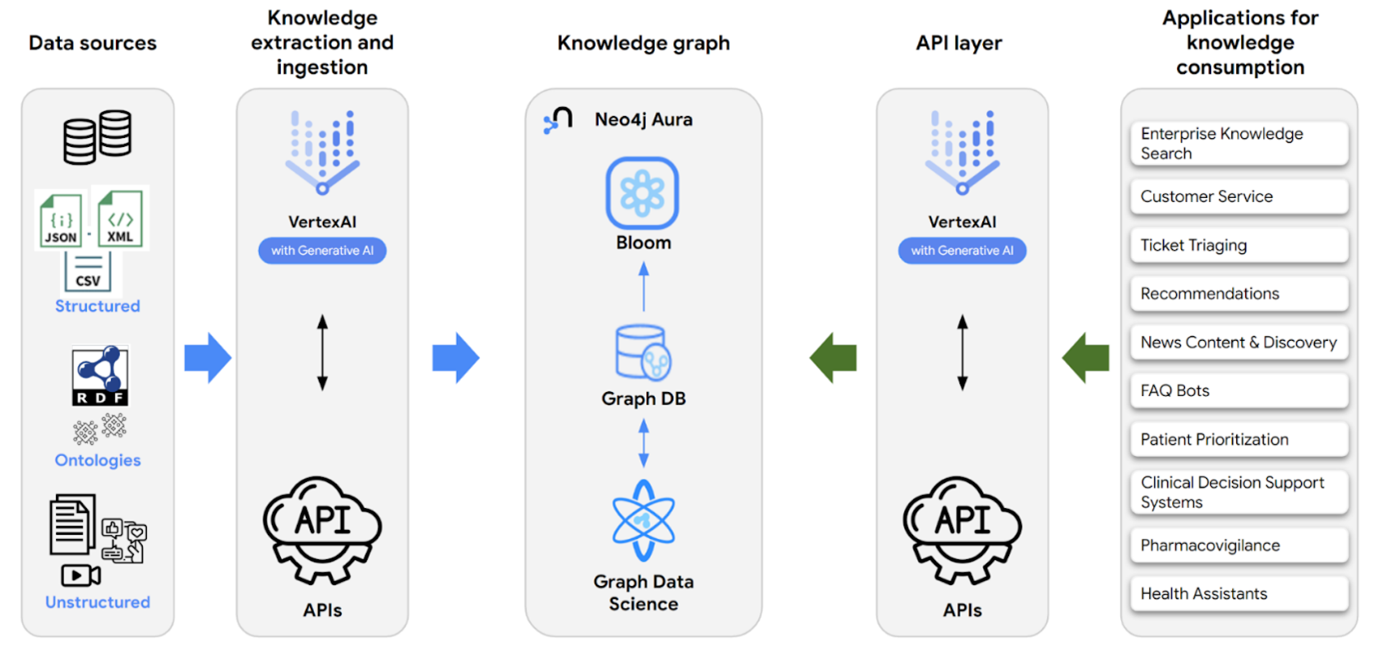

The architecture to perform this task is a specific version of the architecture we saw earlier.

In this case, we have just one data source, rather than many. The data all comes from semi-structured text in the Form 13 filings. The documents are a rather odd mix of text and XML unique to the SEC’s EDGAR system. As such, a convenient shortcut to parsing them is quite useful.

Once we’ve built the knowledge graph, we’ll use a Gradio application to interact with it using natural language.

Knowledge Extraction

Neo4j is a flexible-schema database: you can bring in new data and its schema, connect it to what already exists, or iteratively modify the existing schema to fit the use case.

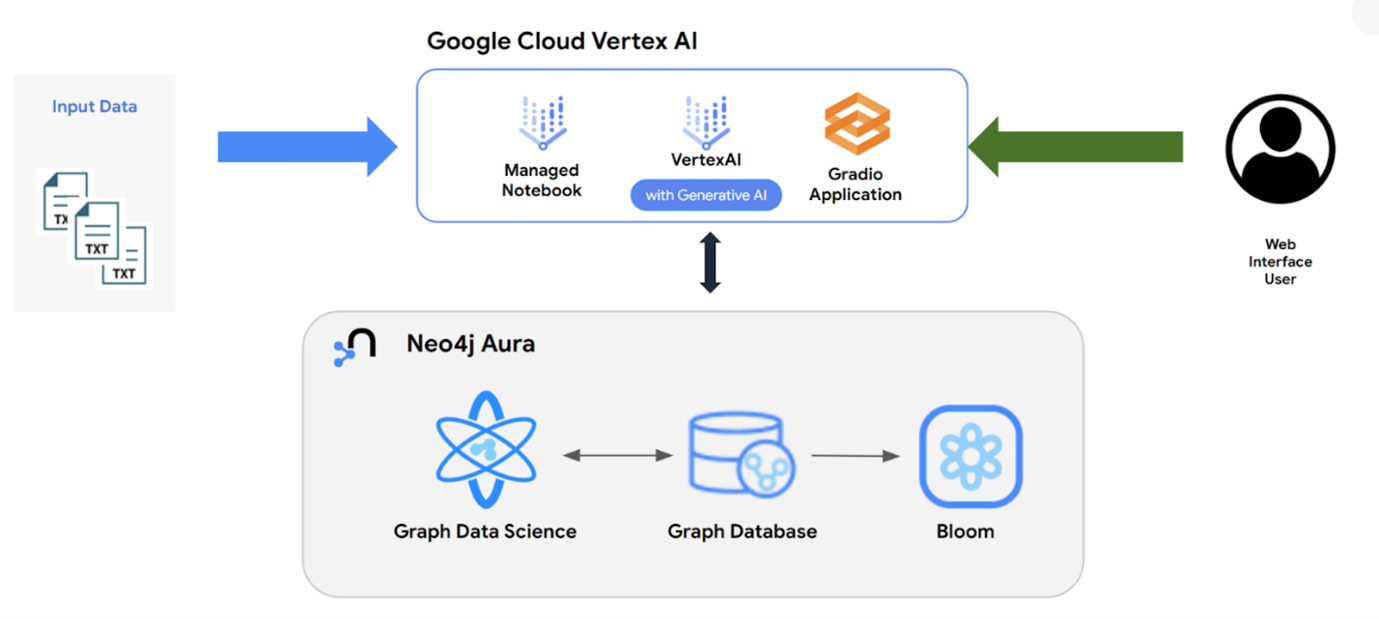

Here is a schema representing the dataset:

To load unstructured data into Neo4j, we must first extract the entities and relationships. This is where generative AI foundation models like Google’s PaLM 2 can help. Using prompt engineering, the PaLM 2 model can extract relevant data in the format of our choice. In our chatbot example, we can chain multiple prompts using PaLM 2’s “text-bison” model, each extracting specific entities and relationships from the input text. Chaining prompts keeps each request and response small, which helps us avoid token-limit errors.
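To make the chaining idea concrete, here is a minimal sketch. It is not the code behind this post: `run_chain`, the two short templates, and `fake_llm` are hypothetical names used only for illustration, and in a real pipeline `llm` would wrap a call to the text-bison model. Each prompt asks for one kind of entity, so every request stays focused.

```python
from string import Template
from typing import Callable, Dict


def run_chain(text: str, templates: Dict[str, str], llm: Callable[[str], str]) -> Dict[str, str]:
    """Send one focused prompt per entity type and collect the raw responses."""
    results = {}
    for name, tpl in templates.items():
        # Each template carries a $ctext placeholder for the document text.
        prompt = Template(tpl).substitute(ctext=text)
        results[name] = llm(prompt)
    return results


# Hypothetical, heavily shortened templates (one per extraction step).
templates = {
    "manager": "Extract the filing manager as JSON.\nText:\n$ctext",
    "holdings": "Extract the holdings as JSON.\nText:\n$ctext",
}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: it just echoes the instruction line.
    return prompt.splitlines()[0]


responses = run_chain("<filingManager>...</filingManager>", templates, fake_llm)
```

Passing the model in as a plain callable keeps the chain easy to test and lets you swap in a real client later without touching the loop.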

The prompt below can be used to extract company and holding information as JSON from a Form 13 document:

```python
mgr_info_tpl = """From the text below, extract the following as json. Do not miss any of this information.
* The tags mentioned below may or may not be namespaced. So extract accordingly. Eg: <ns1:tag> is equal to <tag>
* "name" - The name from the <name> tag under <filingManager> tag
* "street1" - The manager's street1 address from the <com:street1> tag under <address> tag
* "street2" - The manager's street2 address from the <com:street2> tag under <address> tag
* "city" - The manager's city address from the <com:city> tag under <address> tag
* "stateOrCounty" - The manager's stateOrCounty address from the <com:stateOrCountry> tag under <address> tag
* "zipCode" - The manager's zipCode from the <com:zipCode> tag under <address> tag
* "reportCalendarOrQuarter" - The reportCalendarOrQuarter from the <reportCalendarOrQuarter> tag under <address> tag
* Just return me the JSON enclosed by 3 backticks. No other text in the response
Text:
$ctext
"""
```

The output from the text-bison model is then:

```python
{'name': 'TIGER MANAGEMENT L.L.C.',
 'street1': '101 PARK AVENUE',
 'street2': '',
 'city': 'NEW YORK',
 'stateOrCounty': 'NY',
 'zipCode': '10178',
 'reportCalendarOrQuarter': '03-31-2023'}
```
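Because the prompt asks for JSON wrapped in three backticks, the raw response has to be unwrapped before it becomes a dictionary like the one above. The helper below is a rough sketch of that step; `parse_fenced_json` is a hypothetical name, and the `sample` string is a hand-written stand-in for a model response, not real output.

```python
import json
import re

FENCE = "`" * 3  # the three-backtick delimiter requested in the prompt


def parse_fenced_json(response: str) -> dict:
    """Return the JSON object found between the first pair of triple backticks."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE)
    match = re.search(pattern, response, flags=re.DOTALL)
    # Fall back to the whole response if the model forgot the delimiters.
    payload = match.group(1) if match else response
    return json.loads(payload)


# Hand-written stand-in for a model response.
sample = (
    FENCE
    + 'json\n{"name": "TIGER MANAGEMENT L.L.C.", "city": "NEW YORK", "street2": ""}\n'
    + FENCE
)
manager = parse_fenced_json(sample)
```

`json.loads` raises an error on malformed output, which is a convenient signal to retry the prompt rather than write bad data into the graph.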

The text-bison model was able to understand the text and extract information in the output format we wanted. Let’s see how this looks in Neo4j Browser.

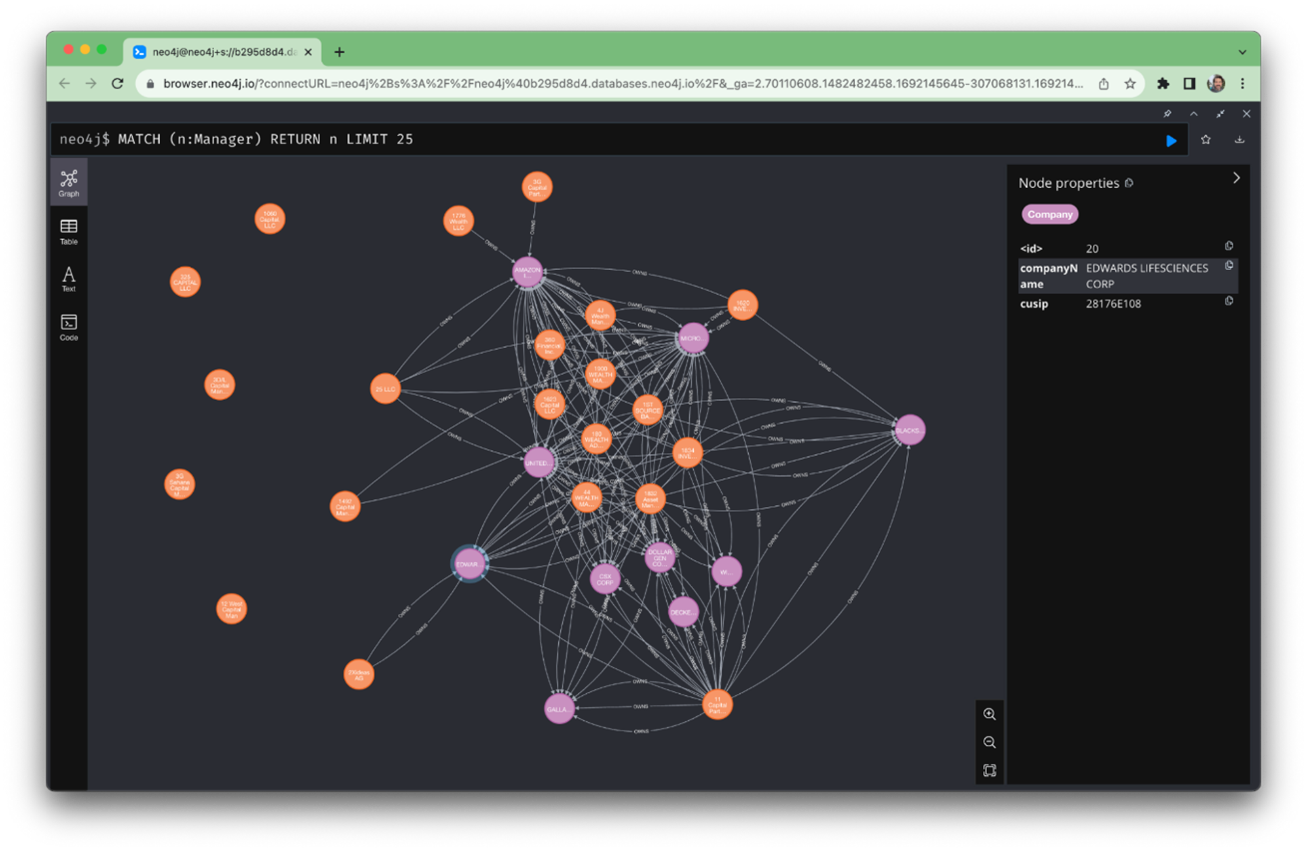

The screenshot above shows the knowledge graph that we built, now stored in Neo4j Graph Database.

We’ve now used Vertex AI generative AI to extract entities and relationships from our semistructured data. We wrote those into Neo4j using Cypher queries created by Vertex AI. These steps would previously have been manual. Generative AI helps automate them, saving time and effort.
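In this post the Cypher that writes to Neo4j is generated by Vertex AI. For comparison, the sketch below shows what a hand-written, parameterized version of that write step could look like. The `Manager`, `Company`, and `OWNS` names and the `managerName` and `companyName` properties follow the query shown later in this post; `MERGE_HOLDING`, `holding_statements`, and the placeholder company names are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Assumed graph model: (:Manager)-[:OWNS]->(:Company), keyed by name properties.
# MERGE creates a node or relationship only if it does not already exist.
MERGE_HOLDING = (
    "MERGE (m:Manager {managerName: $managerName}) "
    "MERGE (c:Company {companyName: $companyName}) "
    "MERGE (m)-[:OWNS]->(c)"
)


def holding_statements(
    manager: Dict[str, str], companies: List[str]
) -> List[Tuple[str, Dict[str, str]]]:
    """Pair the MERGE statement with one parameter map per holding."""
    return [
        (MERGE_HOLDING, {"managerName": manager["name"], "companyName": company})
        for company in companies
    ]


manager = {"name": "TIGER MANAGEMENT L.L.C."}
# Placeholder company names, not real holdings.
statements = holding_statements(manager, ["EXAMPLE CO A", "EXAMPLE CO B"])

# With the official neo4j Python driver, each pair could be sent as
# session.run(query, params); connection setup is left out of this sketch.
```

Passing values as query parameters instead of pasting them into the Cypher string avoids quoting problems with names like "L.L.C." and lets the database reuse the query plan.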

Knowledge consumption

Now that we’ve built our knowledge graph, we can start to consume data from it. Cypher is Neo4j’s query language, so to build a chatbot we have to convert the user’s natural-language input (English, in this case) into Cypher. Models like PaLM 2 are capable of doing this. The base model produces good results, but to achieve better accuracy, we can use two additional techniques:

- Prompt Engineering – Provide a few samples to the model input to achieve the desired output. We can also try chain-of-thought prompting, to teach the model how to achieve a certain Cypher output.

- Adapter Tuning (Parameter Efficient Fine Tuning) – We can also adapter tune the model using sample data. The weights generated this way will stay within your tenant.
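As a small illustration of the prompt engineering option, the sketch below assembles a few-shot block of question and Cypher pairs and appends the new question. The `few_shot_block` helper, the `Question:` and `Answer:` labels, and the sample pair are assumptions for illustration; the post does not show the samples it actually used.

```python
from typing import List, Tuple


def few_shot_block(examples: List[Tuple[str, str]]) -> str:
    """Render (question, cypher) pairs as alternating Question/Answer lines."""
    lines = []
    for question, cypher in examples:
        lines.append(f"Question: {question}")
        lines.append(f"Answer: {cypher}")
    return "\n".join(lines)


# Hypothetical sample pair, written against the Manager-OWNS-Company model.
examples = [
    (
        "Which managers own Apple?",
        "MATCH (m:Manager)-[o:OWNS]->(c:Company) "
        "WHERE toLower(c.companyName) CONTAINS 'apple' "
        "RETURN m.managerName AS manager",
    ),
]

# The prompt ends with an open "Answer:" for the model to complete.
prompt = few_shot_block(examples) + "\nQuestion: Which managers own Netflix?\nAnswer:"
```

A handful of well-chosen pairs that cover the schema's main relationships usually matters more than a long list of near-duplicates.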

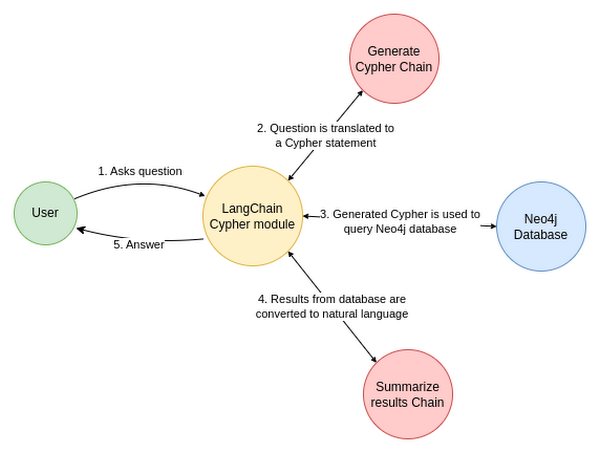

The data flow in this case is then:

Using a tuned model and a prompt template like the following, text-bison can translate a plain-English question into a Cypher query:

```
#prompt/template
CYPHER_GENERATION_TEMPLATE = """You are an expert Neo4j Cypher translator who understands the question in english and convert to Cypher strictly based on the Neo4j Schema provided and following the instructions below:
- Generate Cypher query compatible ONLY for Neo4j Version 5
- Do not use EXISTS, SIZE keywords in the Cypher. Use alias when using the WITH keyword
- Please do not use the same variable names for different nodes and relationships in the query.
- Use only Nodes and relationships mentioned in the schema
- Always enclose the Cypher output inside 3 backticks
- Always do a case-insensitive and fuzzy search for any properties related search. Eg: to search for a Company name use `toLower(c.name) contains 'neo4j'`
- Candidate node is synonymous to Manager
- Always use aliases to refer the node in the query
- 'Answer' is NOT a Cypher keyword. Answers should never be used in a query.
- Please generate only one Cypher query per question.
- Cypher is NOT SQL. So, do not mix and match the syntaxes.
- Every Cypher query always starts with a MATCH keyword.
```

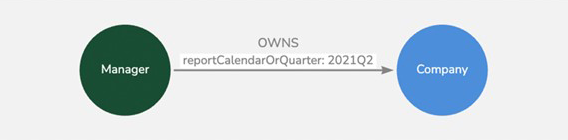

Vertex AI generative AI can then translate a question like “Which managers own FAANG stocks?” into Cypher such as:

```cypher
MATCH (m:Manager)-[o:OWNS]->(c:Company)
WHERE toLower(c.companyName) IN ["facebook", "apple", "amazon", "netflix", "google"]
RETURN m.managerName AS manager
```

This is kind of amazing. Vertex AI has understood what FAANG means, mapped that to company names and then created a Cypher query based on that. The final answer is “Beacon Wealthcare LLC and Pinnacle Holdings, LLC own FAANG stocks.”
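Put together, the consumption flow is: question in, Cypher out of the model, query against Neo4j, rows back. The sketch below wires those steps with injected callables so it runs without any service. `extract_cypher`, `answer`, `fake_generate`, and `fake_run_query` are hypothetical names; in a real app `generate` would call the tuned model and `run_query` would call the Neo4j driver.

```python
from typing import Callable, Dict, List

FENCE = "`" * 3  # the three-backtick wrapper the prompt asks the model to use


def extract_cypher(response: str) -> str:
    """Pull the query out of the triple-backtick wrapper, if present."""
    parts = response.split(FENCE)
    query = (parts[1] if len(parts) >= 3 else response).strip()
    # Drop a leading language tag such as "cypher" that some models add.
    if query.lower().startswith("cypher"):
        query = query[len("cypher"):].strip()
    return query


def answer(
    question: str,
    generate: Callable[[str], str],
    run_query: Callable[[str], List[Dict[str, str]]],
) -> List[Dict[str, str]]:
    """Translate a question to Cypher, sanity-check it, and run it."""
    query = extract_cypher(generate(question))
    # Mirrors the template rule that every query starts with MATCH.
    if not query.upper().startswith("MATCH"):
        raise ValueError(f"Refusing to run unexpected query: {query!r}")
    return run_query(query)


def fake_generate(question: str) -> str:
    # Stand-in for the model call.
    return FENCE + "\nMATCH (m:Manager)-[o:OWNS]->(c:Company) RETURN m.managerName AS manager\n" + FENCE


def fake_run_query(query: str) -> List[Dict[str, str]]:
    # Stand-in for a database call.
    return [{"manager": "Beacon Wealthcare LLC"}]


rows = answer("Which managers own FAANG stocks?", fake_generate, fake_run_query)
```

The MATCH check is a cheap guard against the model returning prose or a write query; it is not a substitute for running the chatbot with a read-only database user.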

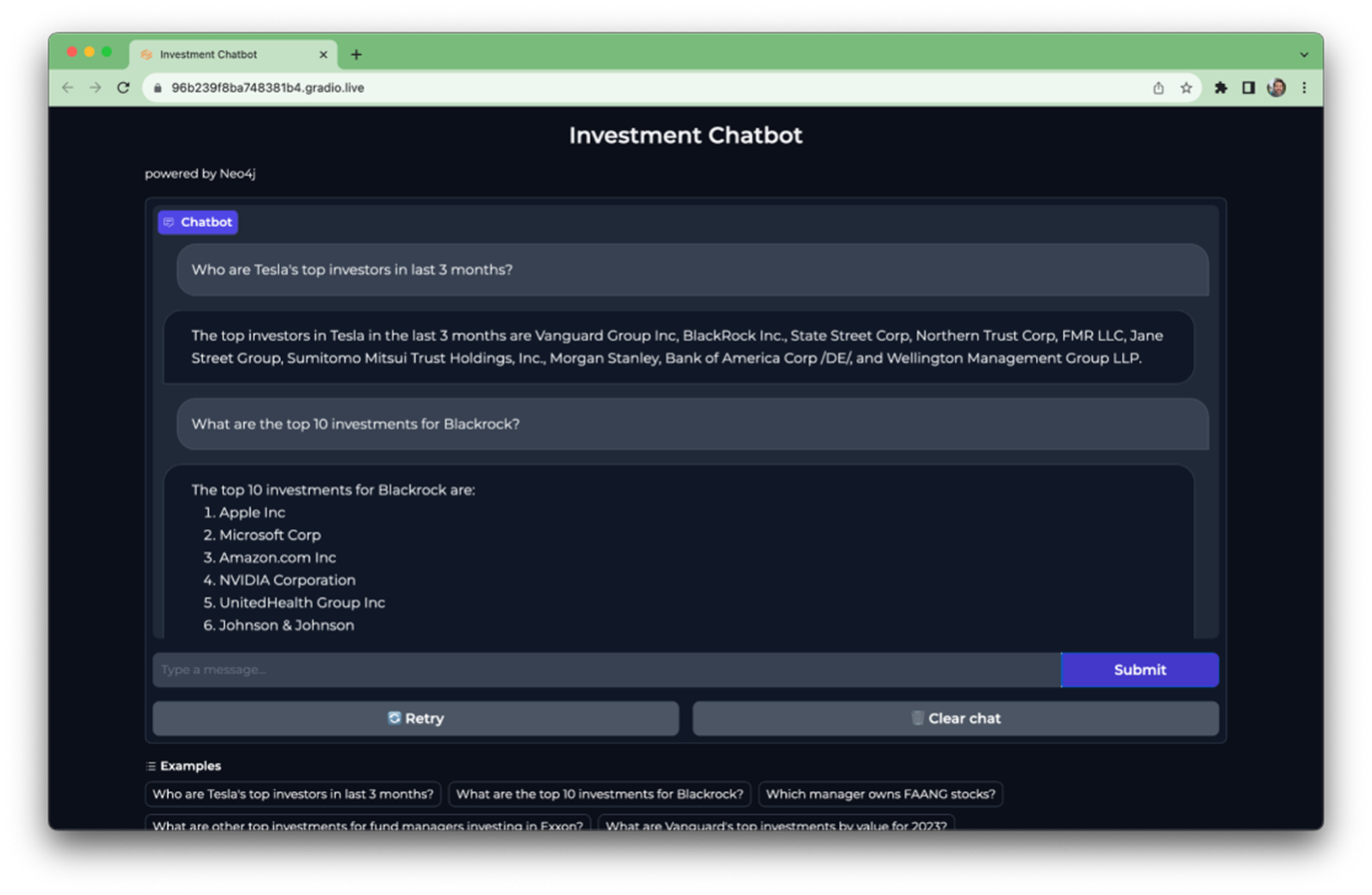

Gradio even provides a nice chatbot widget to wrap this all up.

This chatbot includes several example questions to help you get started.
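For readers who want to try something similar, here is a rough sketch of a chat UI with example questions. It assumes Gradio's `ChatInterface` component, which may not be the widget this post's app used; `respond`, `build_demo`, `EXAMPLES`, and the title are hypothetical, and the callback returns a placeholder instead of querying a database.

```python
from typing import List

# Example questions shown under the chat box to help users get started.
EXAMPLES = ["Which managers own FAANG stocks?"]


def respond(message: str, history: List) -> str:
    """Chat callback: Gradio passes the new message and the prior turns."""
    # Placeholder: a real app would generate Cypher, run it against Neo4j,
    # and phrase the returned rows as an answer.
    return f"(no database connected) You asked: {message}"


def build_demo():
    """Create the chat UI; call .launch() on the result to serve it."""
    import gradio as gr  # imported lazily so respond() can be tested without Gradio

    return gr.ChatInterface(fn=respond, examples=EXAMPLES, title="Form 13 knowledge graph chat")
```

To serve it locally, install Gradio and run `build_demo().launch()`.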

Summary

In this blog post, we walked through a two-part data flow:

- Knowledge Extraction – Taking entities and relationships from our semistructured data and building a knowledge graph from them.

- Knowledge Consumption – Enabling a user to ask questions of that knowledge graph using natural language.

In each case, what made it possible was the unique combination of generative AI capabilities in Google Cloud Vertex AI and Neo4j. This approach automates and simplifies processes that were previously very manual. It enables the application of the knowledge graph approach to a class of problems where it was not feasible before.

Neo4j and Cloocus

Neo4j, a leader in graph databases and analytics, empowers enterprises to uncover hidden relationships and patterns across billions of connected data points quickly and easily. As data volumes grow, customers use the structure of connected data to solve pressing business problems such as fraud detection, customer 360, knowledge graphs, supply chain optimization, personalization, IoT, and network management. Neo4j’s comprehensive graph stack provides robust graph storage, data science, advanced analytics, and visualization, with enterprise-grade security controls, scalable architecture, and ACID compliance. The Neo4j community, a vibrant open-source community of over 250,000 developers, data scientists, and architects, spans hundreds of Fortune 500 companies, government agencies, and NGOs.

Cloocus, through its partnership with Neo4j, supports customers in harnessing generative AI for better innovation. Its skilled group of Data & AI professionals keeps pace with cutting-edge generative AI technology. If you need expert consultation on adopting generative AI, or consulting services for cloud-based data and artificial intelligence, feel free to contact Cloocus.


Copyrights 2024 Cloocus co.,ltd. all rights reserved.