Azure AI content governance in the AI era

Earlier this year, the South Australia Department for Education decided to introduce generative AI in its schools. Before adopting the new technology, however, the department had to make sure it would be introduced responsibly. The key concern was protecting students from potentially inappropriate and harmful content, because the output of a large language model (LLM) is learned from vast amounts of internet data that has not been filtered.

Simon Chapman said that publicly available generative AI tools are of extremely limited use in education because there is nothing governing how students interact with them. “There are no guardrails,” he said.

Azure AI Content Safety provides guardrails that made it possible for the South Australia Department for Education to deploy EdChat in a pilot project across eight schools. The AI-powered chatbot can help students do research and educators do lesson planning. Photo courtesy of South Australia Department for Education.

Nevertheless, the department has now completed a pilot of EdChat, an AI chatbot built on Azure. Nearly 1,500 students and 150 teachers tested the bot on material from across the curriculum, from researching cell division to summarizing the plot of John Steinbeck’s novel “Of Mice and Men.” The department gave high marks to Azure AI Content Safety, a Microsoft service that helps organizations create safer online environments.

Simon Chapman said the safety features built into the chatbot blocked inappropriate input queries and filtered harmful responses, which let teachers focus on the technology’s educational benefits rather than acting as content supervisors.
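The two checks Chapman describes, one on the student’s query and one on the model’s reply, can be sketched as a small wrapper around the chat model. This is a minimal illustration, not EdChat’s actual implementation: `guarded_chat`, `is_harmful`, `generate`, and the toy keyword check are hypothetical names. In a real deployment `is_harmful` would call a moderation service such as Azure AI Content Safety, and `generate` would call the chat model.

```python
from dataclasses import dataclass
from typing import Callable

REFUSAL = "Sorry, I can't help with that request."


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    stage: str  # where content was stopped: "input", "output", or "none"


def guarded_chat(
    query: str,
    is_harmful: Callable[[str], bool],
    generate: Callable[[str], str],
) -> GuardrailResult:
    """Screen the query, call the model, then screen the model's reply."""
    # Stage 1: block an inappropriate query before it reaches the model.
    if is_harmful(query):
        return GuardrailResult(False, REFUSAL, "input")
    reply = generate(query)
    # Stage 2: filter a harmful response before it reaches the student.
    if is_harmful(reply):
        return GuardrailResult(False, REFUSAL, "output")
    return GuardrailResult(True, reply, "none")


# Toy stand-ins for illustration only (hypothetical, not a real moderation API).
BLOCKED_WORDS = {"weapon"}


def toy_is_harmful(text: str) -> bool:
    return any(word in text.lower() for word in BLOCKED_WORDS)


def toy_generate(prompt: str) -> str:
    return f"Here is a summary for: {prompt}"
```

With these stubs, `guarded_chat("Explain cell division", toy_is_harmful, toy_generate)` passes both checks and returns the model’s reply, while a query containing a blocked word is stopped at the input stage and never reaches the model.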

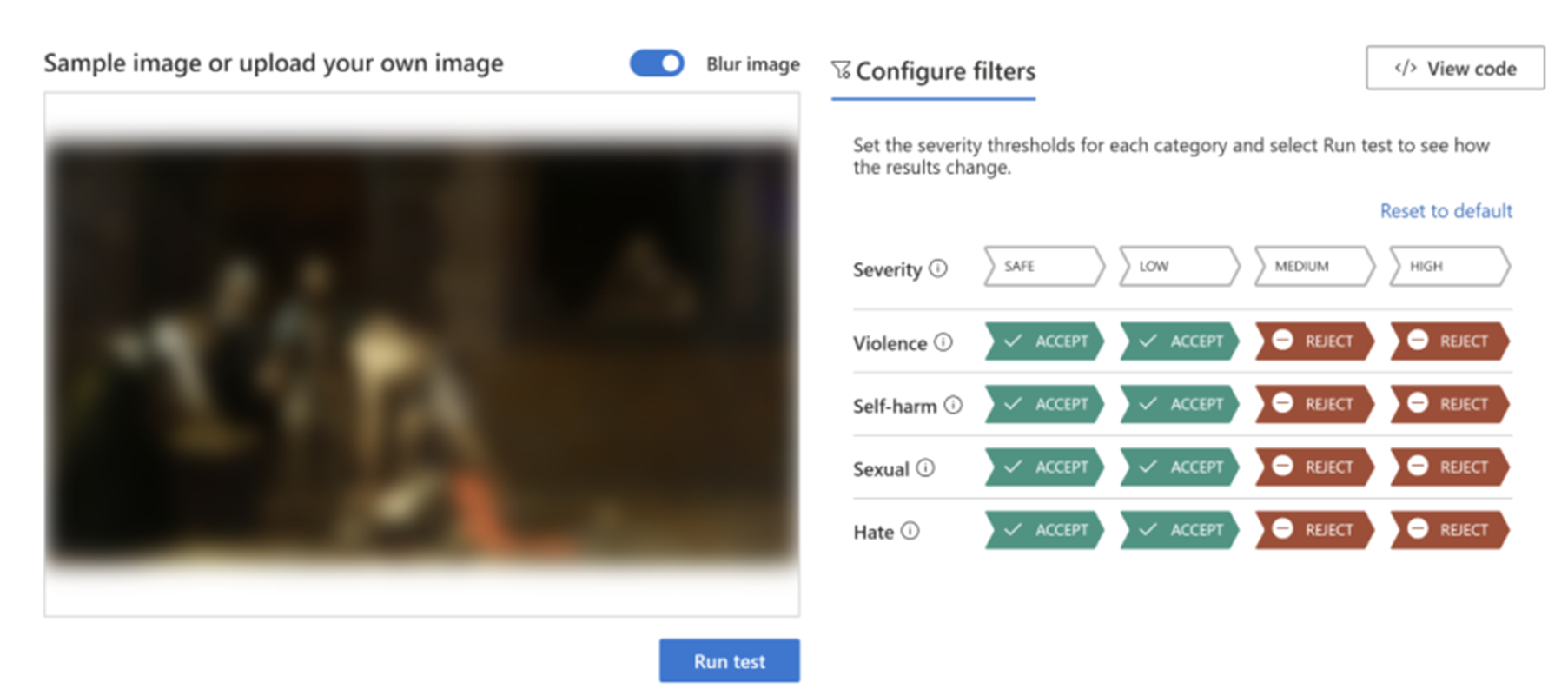

Azure AI Content Safety, developed by Microsoft, helps users detect hateful, violent, sexual and self-harm content. When its advanced language and vision models detect potentially harmful content, they assign it an estimated severity score. Businesses and organizations can then use these scores to block or flag content according to their own policies.
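To make the idea of acting on severity scores concrete, here is a minimal sketch of a policy that turns per-category scores into an allow, flag, or block decision. It assumes the scores arrive as a plain dictionary of category name to integer severity (higher means more severe); the `POLICY` thresholds, the `decide` function, and the dictionary shape are hypothetical illustrations, not values or types defined by the service.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    FLAG = "flag"
    BLOCK = "block"


# Hypothetical policy: the severity at which each category is flagged for
# human review, and the severity at which it is blocked outright.
POLICY = {
    "Hate": {"flag": 2, "block": 4},
    "Sexual": {"flag": 2, "block": 4},
    "Violence": {"flag": 4, "block": 6},
    "SelfHarm": {"flag": 2, "block": 4},
}


def decide(severities: dict[str, int], policy: dict[str, dict[str, int]] = POLICY) -> Action:
    """Return the strictest action triggered by any category's severity score."""
    action = Action.ALLOW
    for category, severity in severities.items():
        limits = policy.get(category)
        if limits is None:
            continue  # category not covered by this policy
        if severity >= limits["block"]:
            return Action.BLOCK  # blocking is the strictest outcome; stop early
        if severity >= limits["flag"]:
            action = Action.FLAG
    return action


print(decide({"Hate": 0, "Violence": 4}))  # Action.FLAG
print(decide({"Sexual": 4}))  # Action.BLOCK
```

Separating the flag and block thresholds lets an organization route borderline content to a human reviewer instead of rejecting it automatically.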

Azure AI Content Safety was initially introduced as part of Azure OpenAI Service, but it is now available as a standalone system. This means customers can use it to screen not only AI-generated content but also user-generated content as part of their own content systems. Microsoft says this broadens the service’s usefulness.

“We aim to provide the tools our customers need to deploy AI more safely,” said Eric Boyd, Microsoft vice president. He added that as companies see the remarkable capabilities of generative AI, their business requirements have broadened, which is why Microsoft has released Azure AI Content Safety as a standalone product.

This is just one example of how Microsoft has been putting its principles for responsible AI into practice. Over the past few years, Microsoft has advocated for AI governance and signed the White House’s voluntary AI safety commitments. It has also shared information to support responsible AI product development, pushed the boundaries of AI research, and developed standards for designing and testing AI systems. Consistent with these efforts, it offers customers tools such as the Responsible AI dashboard.

Sarah Bird, a product manager who leads responsible AI work at Microsoft, said responsible AI is at the core of AI innovation. “We want to provide the best tools to solve these responsibility challenges,” she said, adding that Azure AI Content Safety is a core tool for building safe AI applications.

Customizable for different use cases

Internally, Microsoft has relied on Azure AI Content Safety to protect users of its own AI-powered products. Microsoft says this technology has been essential to responsibly roll out chat-based innovation in products such as Bing, GitHub Copilot, Microsoft 365 Copilot, and Azure Machine Learning.

Because content is everywhere and responsible AI concerns span businesses and industries, a key benefit of Azure AI Content Safety is its configurability: customers can tailor policies to their specific environment and use case. For example, a gaming platform is likely to set different standards for violent language than a school.
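The gaming-versus-school example can be sketched as two policy profiles applied to the same severity scores. The profile names, threshold values, and `is_blocked` function below are hypothetical and chosen only to show how one piece of content can be acceptable on one platform and blocked on another; they do not reflect any default configuration of the service.

```python
# Hypothetical per-platform profiles: content at or above the listed
# severity in any category is blocked. A higher number is more permissive.
PROFILES = {
    "school": {"Hate": 2, "Sexual": 2, "Violence": 2, "SelfHarm": 2},
    "gaming": {"Hate": 2, "Sexual": 2, "Violence": 6, "SelfHarm": 2},
}


def is_blocked(platform: str, severities: dict[str, int]) -> bool:
    """Check the severity scores against the chosen platform's thresholds."""
    thresholds = PROFILES[platform]
    # Categories missing from the scores are treated as severity 0.
    return any(severities.get(category, 0) >= limit for category, limit in thresholds.items())


# A battle description scored as moderately violent (illustrative numbers).
battle_scene = {"Hate": 0, "Sexual": 0, "Violence": 4, "SelfHarm": 0}
print(is_blocked("school", battle_scene))  # True
print(is_blocked("gaming", battle_scene))  # False
```

Keeping thresholds in data rather than code means a new platform only needs a new profile entry, not a change to the checking logic.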

With Azure AI Content Safety’s image moderation tool, customers can easily run tests on images to ensure that they meet their content standards. Courtesy of Microsoft.

Building trust in the AI era

As generative AI continues to spread, potential threats to the online space are expected to increase.

Sarah Bird said Microsoft will continue to improve the technology through research and customer feedback to prepare for these threats. For example, ongoing work on large multimodal models (LMMs) will improve detection of potentially objectionable combinations of images and text (think memes) that can be missed when each element is inspected on its own.

Sarah Bird noted that Microsoft has handled content moderation for decades, building tools that help protect users everywhere from Xbox forums to Bing. Azure AI Content Safety builds on Azure Content Moderator, an earlier service that gave customers access to these content detection capabilities, and adds powerful new language and vision models.

Microsoft hopes that by taking a holistic approach to safety, it can help customers adopt AI broadly and with confidence. Eric Boyd said, “Trust has been a cornerstone of the Microsoft brand and Azure from the beginning. As we enter the AI era, we continue to expand how we protect our customers.”


Cloocus Corp.


Copyright 2024 Cloocus Co., Ltd. All rights reserved.